Before I go on with my portfolio, I want to pay tribute to my idol:

"An idiot admires complexity, a genius admires simplicity"

-Terry A. Davis

Here are some of the technologies and tools I use to build and maintain reliable systems.

I use Docker to containerize applications, ensuring consistency across development and production environments.

I sharpen my Bash skills by working on lightweight projects hosted in remote environments.

During my previous internship, I used Elasticsearch to report logs of suspicious, fraud-related transactions to legal counsel.

I have experience with Java and have worked with the Spring Framework to develop scalable and maintainable backend applications.

I use Python both for writing scripts and for developing machine learning projects, allowing me to quickly prototype and solve data-driven problems.

I have a solid understanding of SQL, with extensive experience using PostgreSQL for database management and optimization in various projects.

Actually, I am currently learning AWS and am enrolled in an official course provided by Amazon to deepen my understanding of cloud computing and its services.

I can hear you saying "duh".

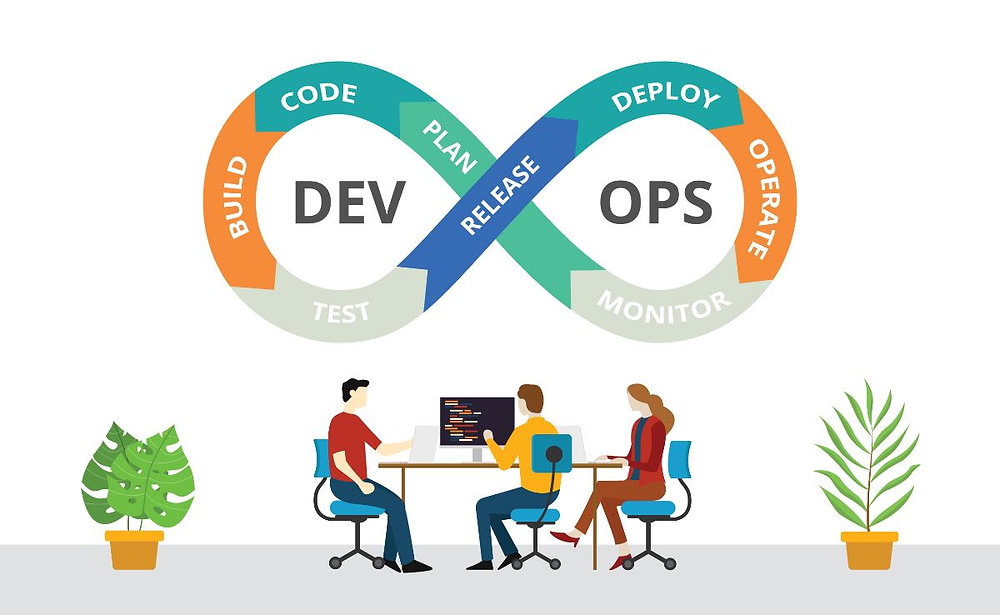

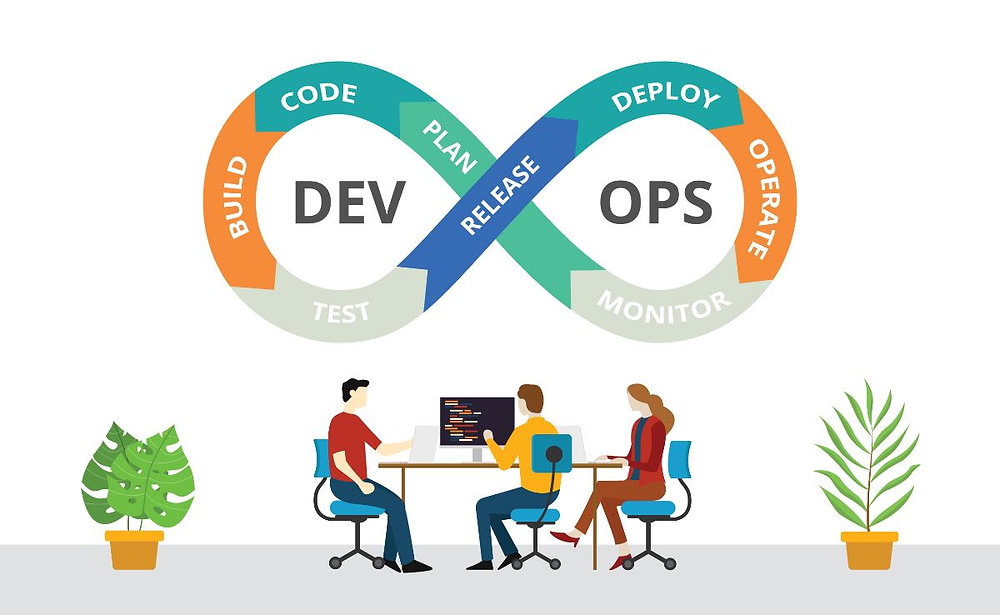

Gained hands-on experience by setting up and managing a remote Ubuntu server. Wrote and tested playbooks to automate configuration and deployment, which deepened my understanding of infrastructure as code (IaC) and DevOps principles.

I have started writing blog posts on Medium. Want to take a look?

Since I couldn't find any Turkish documentation on this topic anywhere, I decided to write some myself. The post covers how to reduce image size and build time, among other things, and tries to clear up some confusion about cache busting with the `apt-get update` command.

In this post, I tried to explain these concepts, with a little bonus at the end. Since they all act as intermediaries, it is important to understand in depth how each one works in order to see the differences between them.

In this post, I explored file permissions and their octal representation in UNIX and UNIX-like systems. To add context, I looked back at the early days of UNIX and the computing limitations of that era. My goal was to explain not just how permission bits work, but why they were designed that way — including a brief look at number systems and the practical needs of early operating systems.
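To make the octal idea concrete, here is a minimal sketch written for this page rather than taken from the post. It shows that each `rwx` triplet maps to exactly one octal digit, using r = 4, w = 2 and x = 1. The helper names `symbolic_to_octal` and `octal_to_symbolic` are hypothetical. The sketch deliberately ignores the setuid, setgid and sticky bits, and also the leading file-type character that `ls -l` prints.

```python
"""Hypothetical helpers illustrating UNIX permission bits in octal.

Assumption: only the nine basic permission bits (owner, group, others) are
handled; setuid/setgid/sticky bits and the file-type character are ignored.
"""

# Each permission class is three bits: read = 4, write = 2, execute = 1.
PERMISSION_BITS = (("r", 4), ("w", 2), ("x", 1))


def symbolic_to_octal(symbolic: str) -> int:
    """Convert a 9-character string like 'rwxr-xr--' to an integer mode (0o754)."""
    if len(symbolic) != 9:
        raise ValueError("expected exactly 9 characters, e.g. 'rwxr-xr--'")
    mode = 0
    # Walk the owner, group, and others triplets in order.
    for start in (0, 3, 6):
        digit = 0
        for char, (letter, value) in zip(symbolic[start:start + 3], PERMISSION_BITS):
            if char == letter:
                digit += value
            elif char != "-":
                raise ValueError(f"unexpected character {char!r} in {symbolic!r}")
        # Shift the previous digits left by one octal place (3 bits) and append.
        mode = (mode << 3) | digit
    return mode


def octal_to_symbolic(mode: int) -> str:
    """Convert an integer mode such as 0o644 back to 'rw-r--r--'."""
    if not 0 <= mode <= 0o777:
        raise ValueError("mode must be between 0o000 and 0o777")
    triplets = []
    # Owner bits sit at shift 6, group bits at shift 3, others at shift 0.
    for shift in (6, 3, 0):
        digit = (mode >> shift) & 0o7
        triplets.append("".join(
            letter if digit & value else "-" for letter, value in PERMISSION_BITS
        ))
    return "".join(triplets)


if __name__ == "__main__":
    print(oct(symbolic_to_octal("rwxr-xr--")))  # 0o754
    print(octal_to_symbolic(0o644))             # rw-r--r--
```

Because three bits fit exactly into one octal digit, `chmod 754 file` and `chmod u=rwx,g=rx,o=r file` set identical permissions.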

In this post, I dive into two fundamental Linux kernel features — namespaces and cgroups — which together form the backbone of modern container technologies like Docker. I aimed to explain how these mechanisms provide process isolation and resource control, enabling containers to run as lightweight, secure, and efficient environments.

Here are some of my selected projects!

Developed 'Employee Attrition Predictor,' an application that uses a machine learning model written in Python to predict whether employees will stay with the company based on factors such as marital status and education. The backend was built with the Java Spring Framework.

Developed the backend of a blog project with the .NET framework during my internship at NTT Data Business Solutions. The work involved implementing core functionality such as user authentication, post creation, and post editing, with a focus on writing clean, maintainable code and following backend development best practices.

Integrated new transformer-based topic modeling and sentiment analysis models into our web application, Bilgi AILab, where researchers can upload their data and leverage various NLP models. We also fine-tuned models with Turkish language support to detect a wide range of emotions such as happiness, anger, fear, surprise, and sadness, enabling emotion-aware and multilingual text analysis.

Developed a customer sentiment analysis project that scrapes and analyzes comments from Trendyol product pages. The backend was built with Python (FastAPI, Selenium), with Ngrok used for tunneling, while the frontend was created on Kuika's low-code platform during an AI hackathon.

Developed an NLP-based project to classify emails as spam or not, leveraging five different machine learning models. Each model was fine-tuned and evaluated using three distinct datasets to ensure robustness and generalizability. The project focused on model comparison to determine the most effective approach for spam detection.